Overview

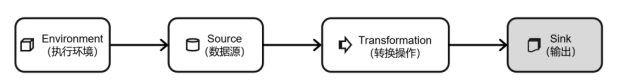

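CDC is short for Change Data Capture. The core idea is to monitor and capture changes in a database (inserts, updates, and deletes of rows or tables), record them completely in the order they occur, and write them to a message broker so that other services can subscribe to and consume them.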
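To make the "record changes in order, then let consumers replay them" idea concrete, here is a minimal in-memory sketch. It does not use Flink or any database; the `Op`, `Change`, and `replay` names are hypothetical and only illustrate how a consumer could rebuild a copy of a table from an ordered change log.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ChangeLogReplay {

    /** Kind of row-level change; illustrative names, not a Flink CDC API. */
    enum Op { INSERT, UPDATE, DELETE }

    /** One captured change: operation, primary key, and new value (null for DELETE). */
    static final class Change {
        final Op op;
        final int id;
        final String value;

        Change(Op op, int id, String value) {
            this.op = op;
            this.id = id;
            this.value = value;
        }
    }

    /** Replays the changes in captured order and returns the resulting replica. */
    static Map<Integer, String> replay(List<Change> log) {
        Map<Integer, String> replica = new LinkedHashMap<>();
        for (Change c : log) {
            if (c.op == Op.DELETE) {
                replica.remove(c.id);
            } else {
                // INSERT and UPDATE both store the latest value for the key
                replica.put(c.id, c.value);
            }
        }
        return replica;
    }

    public static void main(String[] args) {
        List<Change> log = new ArrayList<>();
        log.add(new Change(Op.INSERT, 1, "hello"));
        log.add(new Change(Op.INSERT, 2, "world"));
        log.add(new Change(Op.UPDATE, 1, "hello, cdc"));
        log.add(new Change(Op.DELETE, 2, null));
        System.out.println(replay(log)); // prints {1=hello, cdc}
    }
}
```

Order matters here: replaying the same four changes in a different order (for example, the DELETE before the second INSERT) would leave a different replica, which is why CDC pipelines preserve the order in which changes occurred.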

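Flink CDC is a standalone open-source project hosted on GitHub. The Flink community developed the flink-cdc-connectors component, a set of source connectors that read both full snapshot data and incremental change data directly from databases such as MySQL and PostgreSQL.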

GitHub: https://github.com/ververica/flink-cdc-connectors

Documentation: https://ververica.github.io/flink-cdc-connectors/

Code example

1. Software versions

JDK 1.8

Flink 1.15.0

Flink CDC 2.4.0

2. Project dependencies

pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.example</groupId>

<artifactId>flink-job</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<flink.version>1.15.0</flink.version>

<flinkcdc.version>2.4.0</flinkcdc.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-base</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-common</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>com.ververica</groupId>

<artifactId>flink-connector-mysql-cdc</artifactId>

<version>${flinkcdc.version}</version>

</dependency>

<dependency>

<groupId>com.ververica</groupId>

<artifactId>flink-connector-postgres-cdc</artifactId>

<version>${flinkcdc.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.68</version>

</dependency>

<dependency>

<groupId>org.postgresql</groupId>

<artifactId>postgresql</artifactId>

<version>42.6.0</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.20</version>

</dependency>

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-core</artifactId>

<version>1.2.3</version>

</dependency>

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-access</artifactId>

<version>1.2.3</version>

</dependency>

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

<version>1.2.3</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-jar-plugin</artifactId>

<version>3.1.2</version>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.3</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

3. MySQLCDC code

package job.demo;

import com.ververica.cdc.connectors.mysql.source.MySqlSource;

import com.ververica.cdc.debezium.JsonDebeziumDeserializationSchema;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class MySQLCDC {

public static void main(String[] args) throws Exception {

MySqlSource<String> mySqlSource = MySqlSource.<String>builder()

.hostname("192.168.100.101")

.port(3306)

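.databaseList("test") // databases to capture; to sync an entire database, set tableList to ".*"

.tableList("test.posts") // tables to capture, in database.table form

.username("root")

.password("your-password") // placeholder: replace with the real password

.deserializer(new JsonDebeziumDeserializationSchema()) // converts each SourceRecord to a JSON string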

.build();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// Enable checkpointing with a 3 s interval

env.enableCheckpointing(3000);

env

.fromSource(mySqlSource, WatermarkStrategy.noWatermarks(), "MySQL Source")

// Set the source parallelism to 4

.setParallelism(4)

.print().setParallelism(1); // Set the sink parallelism to 1

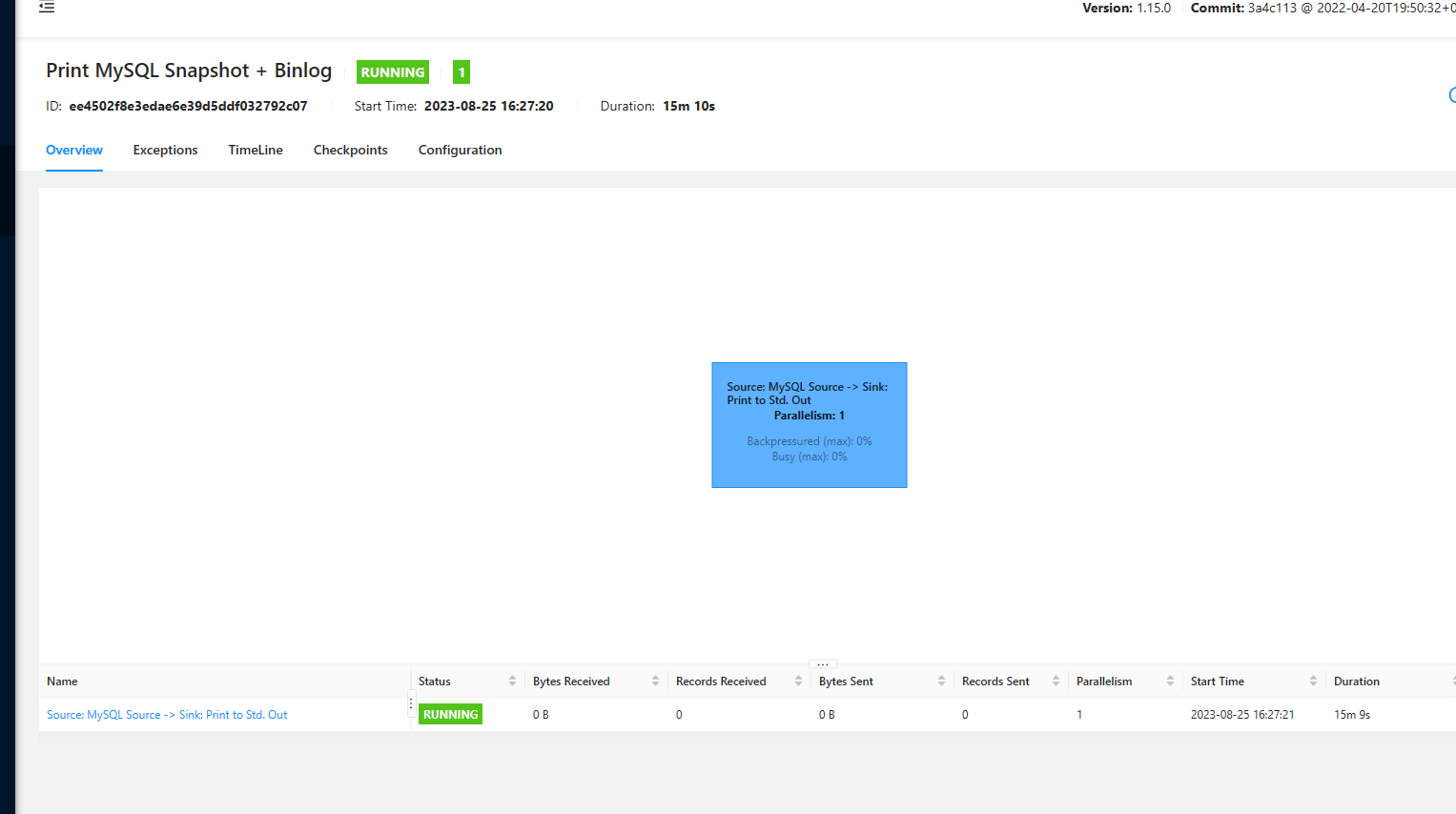

env.execute("Print MySQL Snapshot + Binlog");

}

}

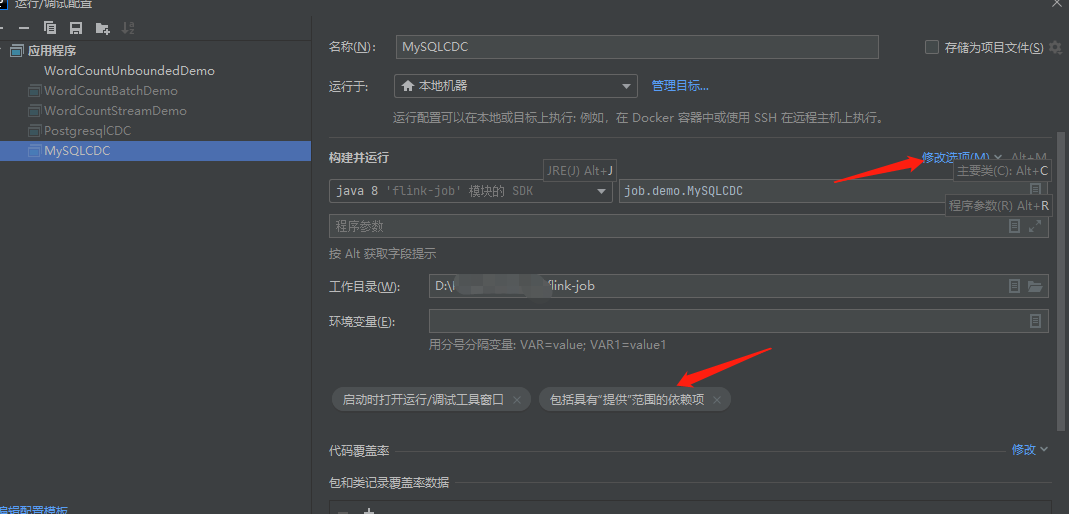
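The `tableList` value in the code above accepts regular expressions. As a rough way to preview which tables a pattern would select, the sketch below assumes that each pattern is matched in full against the `database.table` identifier (this is how the underlying Debezium table filter is documented; verify against the connector version you use). The `TableListPreview` class and the sample table names other than `test.posts` are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

public class TableListPreview {

    /** Returns the identifiers (database.table) that fully match the given pattern. */
    static List<String> captured(String tablePattern, List<String> tableIds) {
        Pattern pattern = Pattern.compile(tablePattern);
        return tableIds.stream()
                .filter(id -> pattern.matcher(id).matches()) // full match, not substring search
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Hypothetical tables; only test.posts appears in this article
        List<String> tables = Arrays.asList("test.posts", "test.comments", "other.posts");

        System.out.println(captured("test.posts", tables)); // prints [test.posts]
        System.out.println(captured("test\\..*", tables));  // prints [test.posts, test.comments]
        System.out.println(captured(".*", tables).size());  // prints 3
    }
}
```

Note that an unescaped `.` in a pattern matches any character, so escaping it (`test\\.posts` in Java source) is the stricter form when table names could be ambiguous.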

4. Run

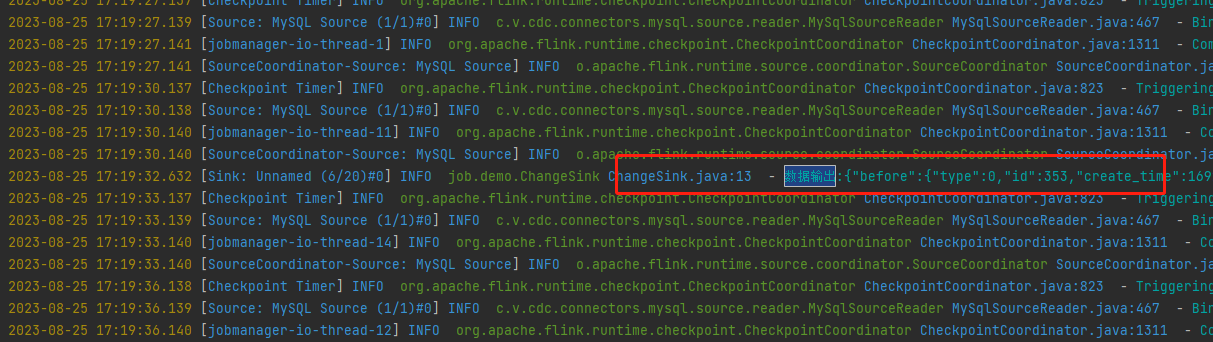

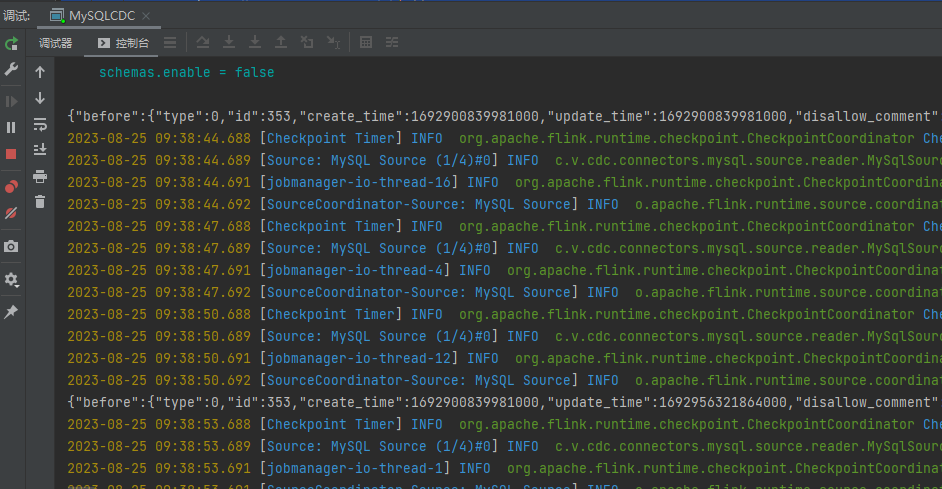

5. Local run results

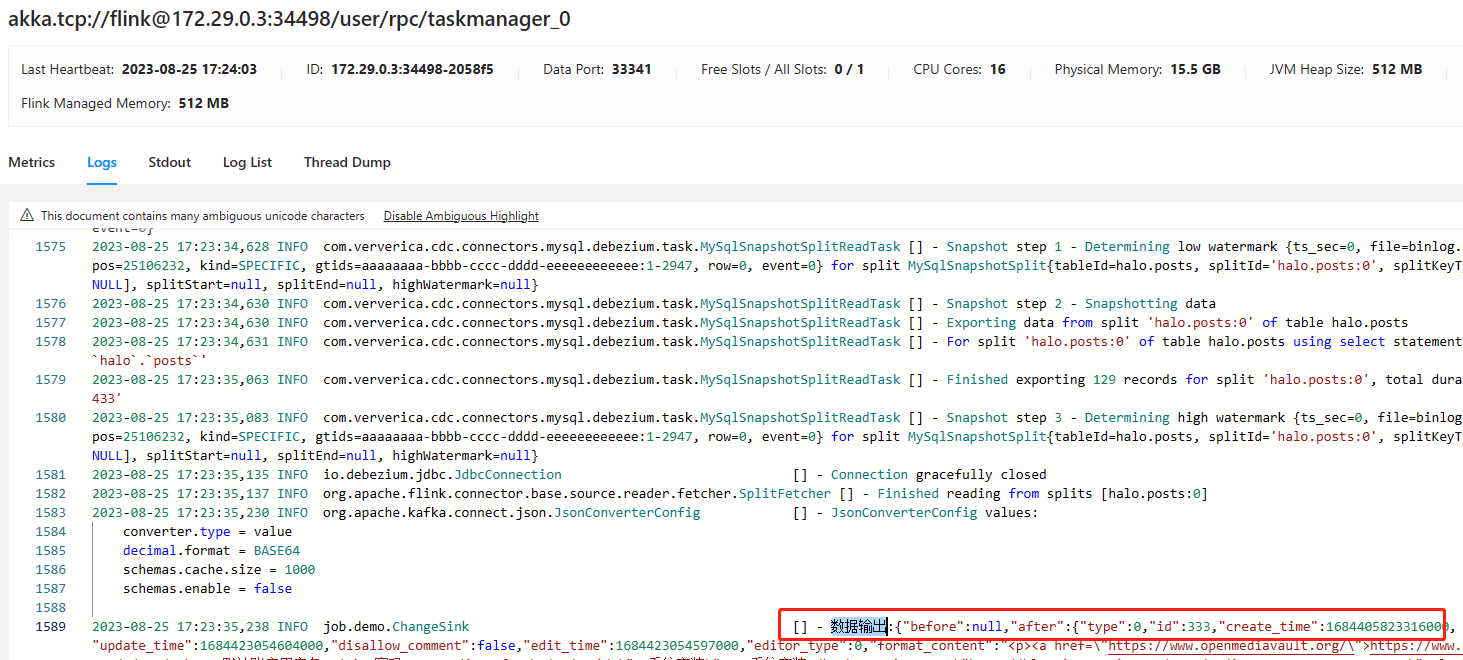

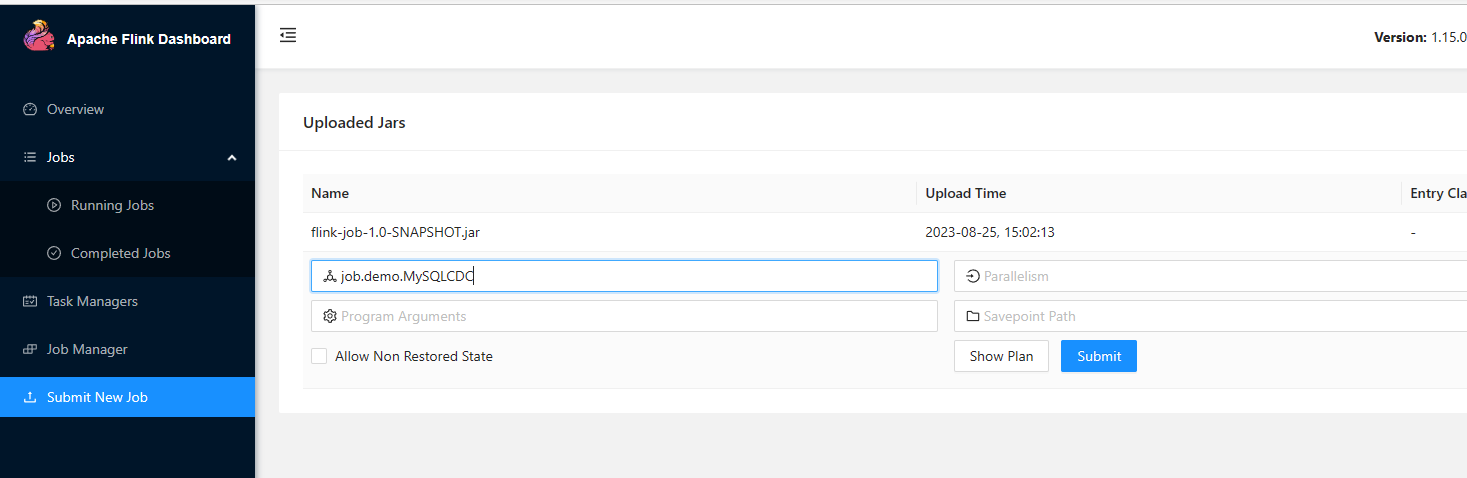
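Each printed record is a JSON string in Debezium's change-event envelope, whose `op` field tells you what kind of change it is: `r` for a snapshot read, `c` for an insert, `u` for an update, and `d` for a delete. The sketch below pulls that field out with a regular expression so it stays free of dependencies. The `ChangeEventOp` class and the sample event (including its column names) are hypothetical, and a real job would more likely parse the JSON with a library such as the fastjson dependency already declared in pom.xml.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class ChangeEventOp {

    // Finds the first "op":"<letter>" pair in the JSON text.
    // Shortcut only: a column that is itself named "op" could be matched first.
    private static final Pattern OP = Pattern.compile("\"op\"\\s*:\\s*\"([a-z])\"");

    /** Maps the Debezium op code in the event to a readable description. */
    static String describe(String json) {
        Matcher matcher = OP.matcher(json);
        if (!matcher.find()) {
            return "unknown";
        }
        switch (matcher.group(1)) {
            case "r": return "snapshot read";
            case "c": return "insert";
            case "u": return "update";
            case "d": return "delete";
            default:  return "unknown";
        }
    }

    public static void main(String[] args) {
        // Hypothetical, abbreviated event; real events carry more fields
        String event = "{\"before\":null,\"after\":{\"id\":1,\"title\":\"hello\"},"
                + "\"source\":{\"db\":\"test\",\"table\":\"posts\"},\"op\":\"c\",\"ts_ms\":0}";
        System.out.println(describe(event)); // prints insert
    }
}
```

When the job first starts, the existing rows of the table arrive as `r` events (the snapshot phase); later changes read from the binlog arrive as `c`, `u`, or `d` events.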

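6. Deploy to a Flink cluster

When packaging the JAR, bundle the CDC connector dependencies into it, because the Flink cluster does not provide them. The maven-shade-plugin section of pom.xml takes care of this: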

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-jar-plugin</artifactId>

<version>3.1.2</version>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.3</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

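On a single-node Flink cluster, remember to lower the parallelism in the code. With the default configuration a TaskManager offers only one task slot, so a source parallelism of 4 cannot be scheduled: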

env

.fromSource(mySqlSource, WatermarkStrategy.noWatermarks(), "MySQL Source")

// Set the source parallelism to 1

.setParallelism(1)

.print().setParallelism(1); // Set the sink parallelism to 1
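Rather than editing the source for each environment, the parallelism could be read from the program arguments. The helper below is a minimal sketch using only the JDK; the `ParallelismArg` class and the `--source-parallelism` flag are hypothetical names, not part of Flink or Flink CDC.

```java
public class ParallelismArg {

    /**
     * Returns the value following "--source-parallelism" in args,
     * or defaultValue when the flag is absent.
     */
    static int sourceParallelism(String[] args, int defaultValue) {
        for (int i = 0; i < args.length - 1; i++) {
            if ("--source-parallelism".equals(args[i])) {
                int value = Integer.parseInt(args[i + 1]);
                if (value < 1) {
                    throw new IllegalArgumentException("parallelism must be >= 1, got " + value);
                }
                return value;
            }
        }
        return defaultValue;
    }

    public static void main(String[] args) {
        String[] clusterArgs = {"--source-parallelism", "4"};
        System.out.println(sourceParallelism(clusterArgs, 1));   // prints 4
        System.out.println(sourceParallelism(new String[0], 1)); // prints 1
    }
}
```

In the job, the call would then read `.setParallelism(ParallelismArg.sourceParallelism(args, 1))`, so a single-node deployment works with no arguments and a larger cluster can pass a higher value.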

Upload the JAR and run it with the entry class (job.demo.MySQLCDC in this example).

Other

1. Custom sink

MySQLCDC.java

import com.ververica.cdc.connectors.mysql.source.MySqlSource;

import com.ververica.cdc.debezium.JsonDebeziumDeserializationSchema;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class MySQLCDC {

public static void main(String[] args) throws Exception {

MySqlSource<String> mySqlSource = MySqlSource.<String>builder()

.hostname("192.168.100.101")

.port(3306)

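.databaseList("test") // databases to capture; to sync an entire database, set tableList to ".*"

.tableList("test.posts") // tables to capture, in database.table form

.username("root")

.password("your-password") // placeholder: replace with the real password

.deserializer(new JsonDebeziumDeserializationSchema()) // converts each SourceRecord to a JSON string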

.build();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// Enable checkpointing with a 3 s interval

env.enableCheckpointing(3000);

DataStreamSource<String> streamSource = env.fromSource(mySqlSource, WatermarkStrategy.noWatermarks(), "MySQL Source")

// Set the source parallelism to 1

.setParallelism(1);

// Use the custom sink

streamSource.addSink(new ChangeSink());

env.execute("Print MySQL Snapshot + Binlog");

}

}

ChangeSink.java

import org.apache.flink.streaming.api.functions.sink.SinkFunction;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class ChangeSink implements SinkFunction<String> {

// static, so the logger is not serialized together with the sink instance
private static final Logger logger = LoggerFactory.getLogger(ChangeSink.class);

@Override

public void invoke(String value, Context context) throws Exception {

logger.info("Data output: {}", value);

SinkFunction.super.invoke(value, context);

}

}